As an AI expert witness and consultant, I’ve had a front-row seat to the remarkable evolution of artificial intelligence. As we approach the end of 2024, several developments merit attention from legal professionals working in intellectual property and technology law.

The Turing Test: A Legal Benchmark?

In 1950, Alan Turing proposed the ‘Imitation Game’ – now known as the Turing test – as a benchmark for machine intelligence. Recent research yields a fascinating insight: GPT-4, which is now somewhat dated in the rapidly evolving AI landscape, was judged to be human in 54% of interactions. For context, human participants were judged to be human 67% of the time on the same test, while the classic ELIZA system managed only 22%. These findings may have significant implications for IP law, particularly in areas concerning AI-generated works.

Four Pillars of AI Advancement

The ongoing AI revolution depends on four “pillars”:

- Training Data Availability

- Algorithm Refinement

- Semiconductor Technology Progress

- Power Infrastructure

Historically, algorithmic breakthroughs have driven the most significant advances in AI, though as we will see, power infrastructure is coming to the forefront. The transformer architecture, introduced in Google’s 2017 paper Attention Is All You Need, exemplifies a line of algorithmic improvement that extends all the way back to Rosenblatt’s perceptron. While the paper was first viewed as merely interesting academic work, its true potential emerged with GPT-3 in 2020, which showed unprecedented scalability and unexpected emergent capabilities.

Emergent Capabilities: New Territory for IP Law

Particularly relevant to patent practitioners are the emergent capabilities that transformer-based AI systems are showing, including:

- Spontaneous coding abilities without specific programming training.

- Learning to perform new tasks from a few examples.

- Breaking down new problems into steps.

- Suddenly showing competence in untrained areas, such as language models showing mathematical abilities.

The Infrastructure Challenge

The physical implementation of AI systems presents its own set of challenges and opportunities. Modern data centers devoted to training – the new factories of intelligence – require sophisticated semiconductor engineering and robust power infrastructure. The economic viability of these operations varies significantly by geography, primarily due to electricity costs and availability.

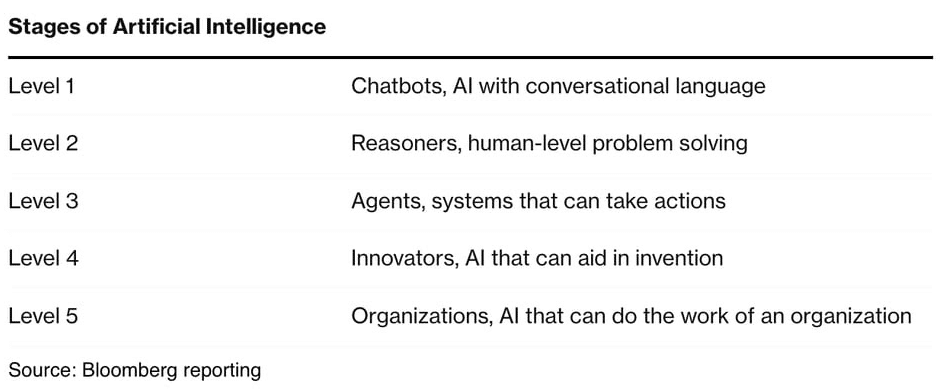

In a recent interview, Sam Altman said the pathway to human-level general intelligence is now clear: “we actually know what to do” to get there. He sees a path from today’s performance to Level 4 in the OpenAI classification shown above. But this progress depends on an abundant supply of electrical power.

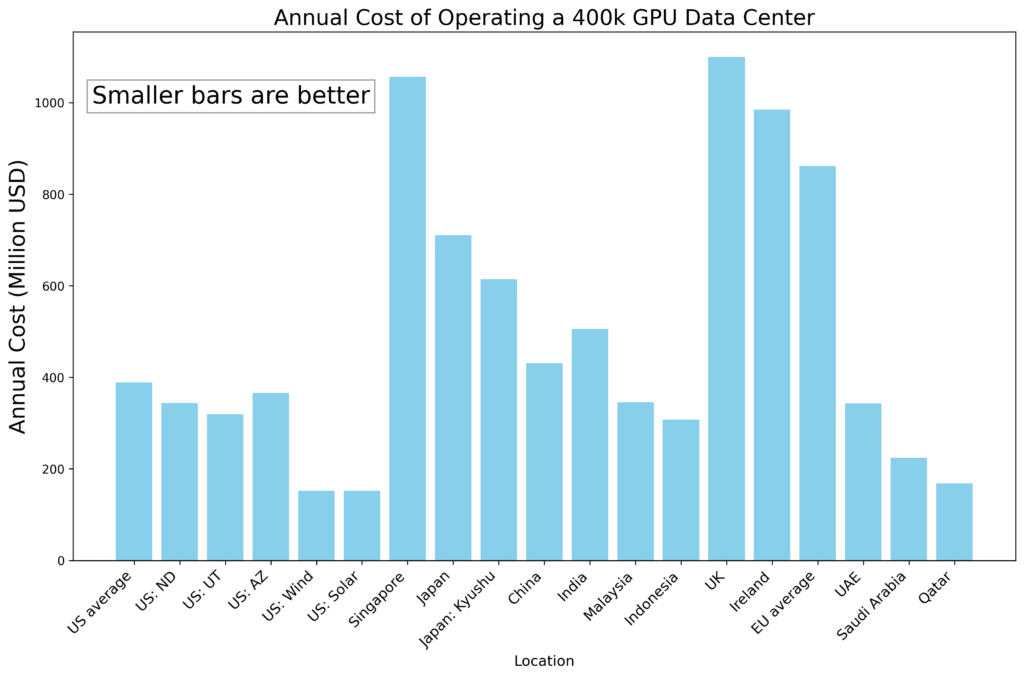

Electricity cost will determine locations of AI factories

The semiconductor, board-level and facilities engineering that goes into data centers (maybe better termed “AI factories”) is impressive. But it all depends on the availability, price and reliability of electric power. I plotted some recent data from SemiAnalysis to compare parts of the world.

The chart shows the operating cost for an AI factory with 400,000 GPUs. By the end of 2024, Meta (Facebook) will be running about 350,000 GPUs, while Microsoft is aiming for 1.8 million GPUs.
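
To see why electricity price weighs so heavily at this scale, a back-of-the-envelope sketch may help. The Python below is illustrative only: the per-GPU power draw, the PUE (the facility overhead factor for cooling and power conversion), and the electricity prices are assumed placeholder values, not figures taken from the SemiAnalysis data or the chart.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours, ignoring leap years


def annual_electricity_cost_usd(num_gpus, watts_per_gpu, pue, usd_per_kwh):
    """Rough yearly electricity bill for a GPU fleet running around the clock.

    All inputs are assumptions supplied by the caller:
      num_gpus      -- number of GPUs in the facility
      watts_per_gpu -- average draw per GPU, including its share of the server
      pue           -- power usage effectiveness (total facility power / IT power)
      usd_per_kwh   -- electricity price in US dollars per kilowatt-hour
    """
    it_power_kw = num_gpus * watts_per_gpu / 1000.0   # IT load in kilowatts
    facility_power_kw = it_power_kw * pue             # add cooling and conversion overhead
    energy_kwh = facility_power_kw * HOURS_PER_YEAR   # energy used over one year
    return energy_kwh * usd_per_kwh


if __name__ == "__main__":
    # Hypothetical inputs: 400,000 GPUs at an assumed 1,000 W each, PUE of 1.25.
    for price in (0.05, 0.10, 0.20):  # assumed prices in $/kWh, not regional data
        cost = annual_electricity_cost_usd(400_000, 1000, 1.25, price)
        print(f"${price:.2f}/kWh -> about ${cost / 1e6:,.0f} million per year")
```

Under these assumed inputs the facility draws about 500 MW, and the yearly bill scales linearly with the price per kilowatt-hour, so doubling the electricity price doubles the operating cost. That linear scaling is the mechanism behind the geographic differences discussed here.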

Conclusion

It’s obvious that AI progress continues to accelerate. IP lawyers will benefit from paying particular attention to (a) AI-generated works and their patentability, (b) emergent capabilities, and (c) the move toward AI agents now being pursued by AI innovators. A critical aspect of continued progress is the availability of large quantities of electric power at reasonable cost.

About the author: Dr. Chris Daft is a testifying expert witness and consultant whose areas of expertise include machine learning, integrated circuits, MEMS and medical imaging.
